One of the new features proposed for the next version of the Network Time Protocol is an additional timestamp taken from a frequency-stabilized clock, which enables frequency transfer between servers and clients separately from the normal time transfer provided by NTP. This document demonstrates how it can improve the stability of synchronization in a chain of servers.

When synchronizing a clock, there is a compromise between time (phase) accuracy (i.e. how close the time it keeps is to UTC) and frequency accuracy (i.e. how close the length of its second is to the SI second). The frequency of the clock changes on its own due to various physical properties, like sensitivity to temperature changes. The frequency needs to be adjusted not only to correct the frequency error, but also to correct the time error that accumulated due to the frequency error.

For example, if a clock is found to be running 1 millisecond behind the reference, it needs to be adjusted to run slightly faster than the reference in order to catch up with it. If it was adjusted to run faster by 1 part per million (ppm), it would take 1000 seconds to correct the original time error, and the clock would have a frequency error of 1 ppm during that time. If it was faster by 10 ppm, it would correct the time error in 100 seconds, but the frequency error would be 10 ppm. A step correction would be instant, but it would amount to an infinite frequency error.
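The arithmetic in this example can be written down as a small sketch. The function name `correction_time` is hypothetical and exists only for illustration; it assumes the clock is slewed at a constant rate until the whole time error is removed.

```python
def correction_time(time_error_s: float, slew_ppm: float) -> float:
    """Seconds needed to remove a time error by slewing the clock at slew_ppm.

    Assumes a constant slew rate; 1 ppm corrects 1 microsecond per second.
    """
    if slew_ppm <= 0:
        raise ValueError("slew rate must be positive")
    return abs(time_error_s) / (slew_ppm * 1e-6)


# A 1-millisecond error corrected at different slew rates. The slew rate is
# also the frequency error the clock has while the correction is in progress.
for ppm in (1, 10, 100):
    seconds = correction_time(1e-3, ppm)
    print(f"{ppm:>3} ppm frequency error for {seconds:.0f} s")
```

The faster the time error is corrected, the larger the frequency error that any client of this clock observes in the meantime.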

This is an issue in network synchronization protocols like NTP. In NTPv4, servers provide clients with two timestamps (receive and transmit) to enable them to measure the time and frequency offset of their clock relative to the server’s clock. The two timestamps are captured by a single clock. This clock needs to be adjusted to correct both time and frequency errors, but there is no way to separate the time and frequency corrections in the protocol. The frequency error of the server is therefore included in the client’s measurements, which makes it harder for the client to keep its clock accurate in both time and frequency.

NTP clients can be servers to other clients. There can be up to 15 servers in the chain. The trouble is that the errors in synchronization accumulate in the chain, and clients further down have to deal with larger and larger time and frequency errors. To minimize these errors, the servers need to slow down corrections of their serving clock, but it’s difficult to figure out by how much. The optimum depends on the stability of the client’s clock, how the client controls its clock, the network jitter between the server and client, etc. An NTP server doesn’t have that information, and it’s likely to be different for each client.

A better solution to this problem is a separate frequency transfer. This has been proposed for NTPv5 in a draft. The protocol is extended to provide the client with a second receive timestamp. The server uses two clocks, one for time transfer and another for frequency transfer, which is more stable as it doesn’t have to be corrected for any time errors. They can be virtual clocks of the NTP implementation, based on the same real-time system clock for instance. There is no need for a second transmit timestamp. The difference between the two receive timestamps is the offset between the server’s clocks at the time of the reception.

The client can use this offset to control the frequency of its clock independently from the time offset, avoiding the issues due to frequency errors in the server’s time-transfer clock. The server doesn’t need to make any assumptions about clients and their clocks, and it can directly serve its best estimate of the true time.
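One way a client might use the two receive timestamps is sketched below. This is an illustration under stated assumptions, not the algorithm of the draft or of any implementation: the names `Sample`, `server_clock_offset`, `freq_clock_offset`, and `frequency_error_ppm` are hypothetical, offsets are assumed to be "server minus client", and the frequency is estimated from just two samples with no filtering.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    local_time: float   # client's clock reading for this measurement (s)
    time_offset: float  # offset measured against the server's time-transfer clock (s)
    rx_time: float      # server receive timestamp from its time-transfer clock (s)
    rx_freq: float      # second receive timestamp from its frequency-transfer clock (s)


def server_clock_offset(s: Sample) -> float:
    """Offset between the server's two clocks at the time of reception."""
    return s.rx_time - s.rx_freq


def freq_clock_offset(s: Sample) -> float:
    """Client offset re-expressed against the server's frequency-transfer clock."""
    return s.time_offset - server_clock_offset(s)


def frequency_error_ppm(first: Sample, last: Sample) -> float:
    """Client frequency error relative to the server's frequency-transfer clock."""
    elapsed = last.local_time - first.local_time
    return (freq_clock_offset(last) - freq_clock_offset(first)) / elapsed * 1e6


# The server slews its time-transfer clock by 10 ppm for 16 seconds, while the
# client's frequency matches the server's frequency-transfer clock exactly.
first = Sample(local_time=0.0, time_offset=0.001, rx_time=100.0, rx_freq=100.0)
last = Sample(local_time=16.0, time_offset=0.00116, rx_time=116.00016, rx_freq=116.0)

naive_ppm = (last.time_offset - first.time_offset) / 16.0 * 1e6
print(f"from time offsets only: {naive_ppm:.2f} ppm")
print(f"with frequency transfer: {frequency_error_ppm(first, last):.2f} ppm")
```

In this sketch the estimate based only on time offsets picks up the server's 10 ppm slew as if it were the client's own frequency error, while the estimate that subtracts the offset between the server's clocks does not.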

Implementation

As an experimental feature, the frequency transfer is implemented in the development (pre-4.2) code of chrony. It can be enabled by adding the extfield F323 option to the server, peer, or pool directive.

The implementation was straightforward as chronyd already measures and controls the time and frequency offsets independently and has separate (virtual) clocks for time and frequency.

An attempt was made to show a proof-of-concept implementation in ntp. It used the FREQHOLD option of the kernel PLL to suppress the frequency updates and controlled the frequency independently. It did not work very well. More significant changes might be needed to implement the feature properly.

Response test

In the following tests a response to a 10-millisecond time step is observed. Such a large offset is uncommon in normal operation, even over the Internet, but it makes the response clearly visible in the noise of other measurements and is mostly deterministic, so the impact of the frequency transfer can be better studied.

In each test, there are four NTP servers/clients synchronized in a chain. A sufficient time is given for their clocks to stabilize and then the 10-millisecond offset is injected on the primary time server (stratum 1). The error of the clocks in the chain (stratum 2, 3, 4) is measured against a reference clock.

The NTP servers/clients are running on Linux as KVM guests on a single machine. They are running in a minimal configuration specifying just the server with the minimum and maximum polling interval set to 4 (16 seconds), a drift file, and allowing the client access. To get more realistic network behavior, a network jitter of 100 microseconds is simulated on the host, on the interface of each guest, using the netem qdisc. The reference clock is the host’s free-running system clock, provided to the guests as a virtual PTP clock (by the ptp-kvm kernel module). The S1 server has its system clock synchronized to the PTP clock using the phc2sys program. The S2, S3, and S4 servers use phc2sys only for monitoring, measuring the time error of their clock once per second, which is what is shown in the following graphs.

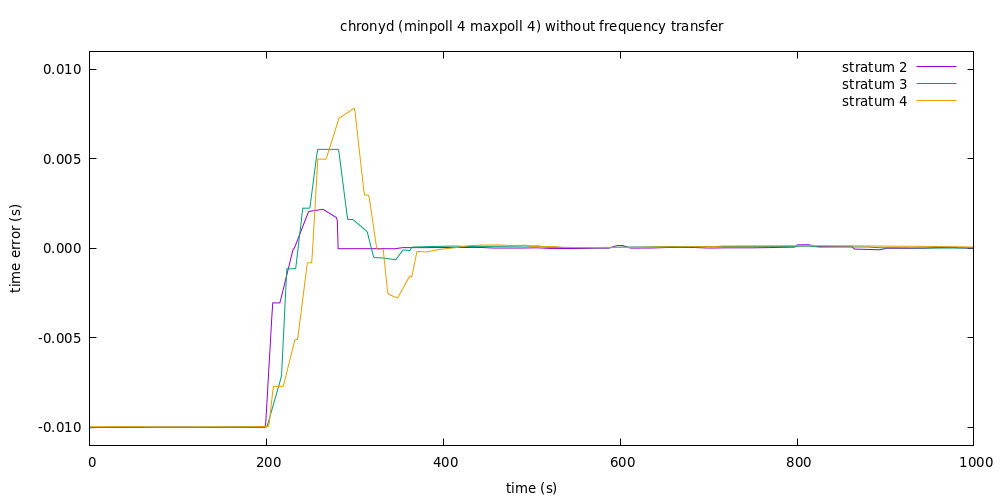

The first graph shows the response of chronyd servers/clients using standard NTP with time transfer only. The S2 server has an overshoot, but it recovers quickly. The S3 and S4 servers have a larger overshoot and there is some oscillation visible. The problematic response clearly grows in the chain.

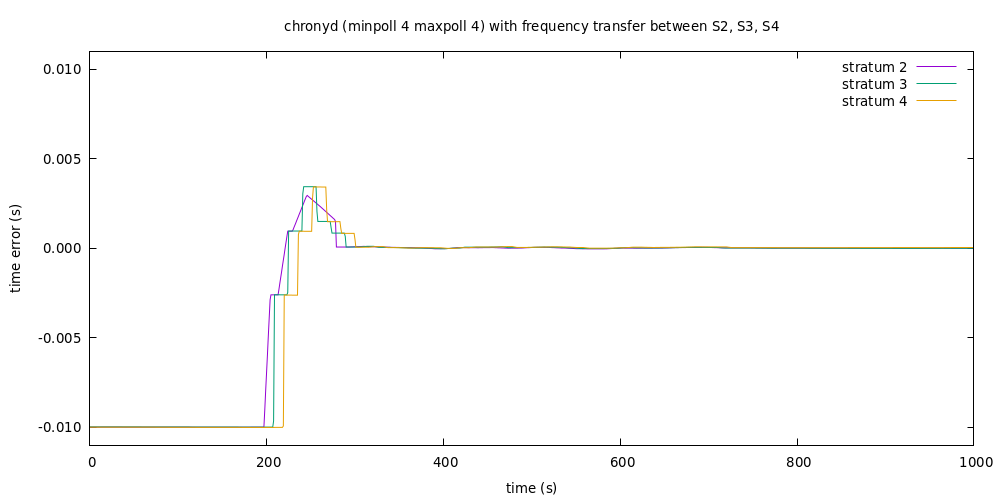

In the second test the frequency transfer is enabled between S2, S3, and S4, but not between S1 and S2. The S2 server has a similar response as in the first test, but the S3 and S4 servers have a much better response, which doesn’t grow in the chain. The stairs in the response of S3 and S4 matching the corrections of S1 indicate the frequency transfer is working well.

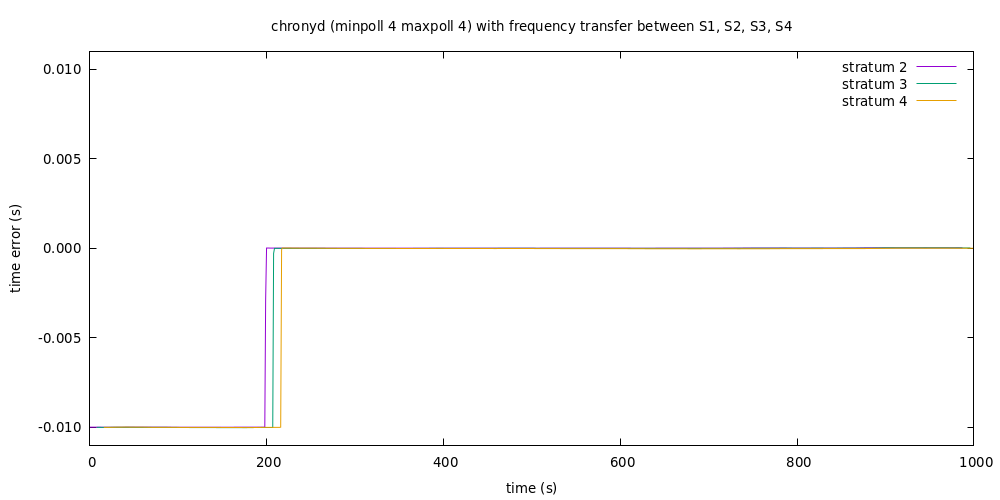

The third test shows the response with frequency transfer enabled between all servers. The response is now perfect, with no overshoot or oscillation visible on any server. There is just a delay in the response due to NTP polling.

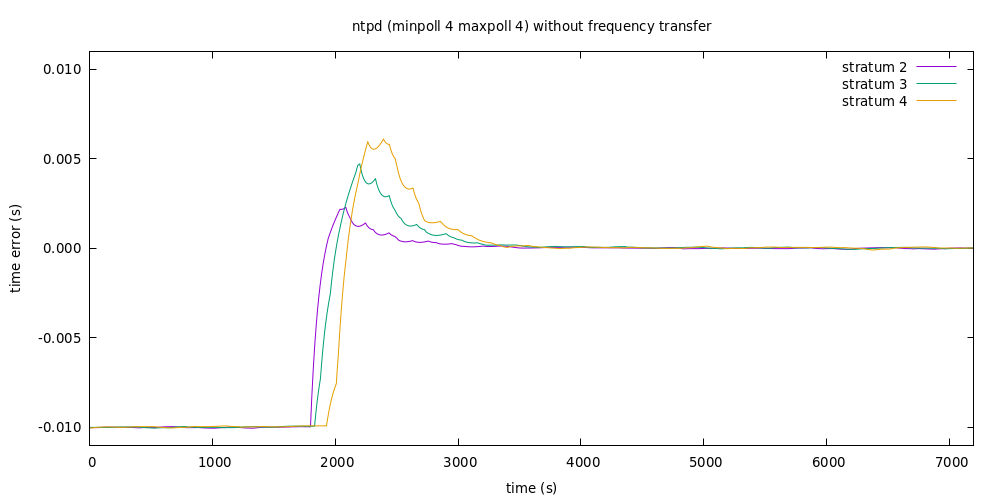

This graph shows the same test as in the first graph, but using ntpd instead of chronyd. The same issue with the overshoot growing in the chain can be observed.

Accuracy test

In the following tests the clocks and network are simulated. There are 16 servers/clients synchronized in a chain with no steps in time or frequency. The S2-S16 clocks have a random-walk wander of 1 ppb/s and the network jitter on each link is 100 microseconds with an exponential distribution. The polling interval is 16 seconds.
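The two noise sources in this setup can be illustrated with a minimal sketch. It is not the simulator used for the tests, and it rests on two assumptions about how the parameters are meant: the wander is taken as a Gaussian frequency step with a standard deviation of 1 ppb applied once per second, and 100 microseconds is taken as the mean of the exponential delay distribution. The function names are hypothetical.

```python
import random


def simulate_frequency_wander(seconds: int, step_ppb: float = 1.0, seed: int = 0) -> list[float]:
    """Random-walk frequency (ppb): one Gaussian step per simulated second."""
    rng = random.Random(seed)
    freq_ppb = 0.0
    history = []
    for _ in range(seconds):
        freq_ppb += rng.gauss(0.0, step_ppb)  # assumed meaning of "1 ppb/s"
        history.append(freq_ppb)
    return history


def simulate_jitter(packets: int, mean_s: float = 100e-6, seed: int = 0) -> list[float]:
    """Exponentially distributed extra network delay (s) for each packet."""
    rng = random.Random(seed)
    return [rng.expovariate(1.0 / mean_s) for _ in range(packets)]


# One hour of clock wander, and one delay sample per 16-second poll.
wander = simulate_frequency_wander(3600)
jitter = simulate_jitter(3600 // 16)
print(f"frequency after one hour: {wander[-1]:.1f} ppb")
print(f"mean jitter: {sum(jitter) / len(jitter) * 1e6:.0f} microseconds")
```

Because the frequency follows a random walk, its typical deviation keeps growing with time, which is why each clock in the chain needs continuous frequency corrections even though there are no steps in time or frequency.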

chronyd is tested without and with the frequency transfer. ntpd is tested in two configurations of the simulated kernel PLL. One uses the Linux PLL shift of 2 and the other uses a shift of 4 corresponding to the original "nanokernel" implementation and other systems.

The following table shows the RMS time error in microseconds relative to the S1 clock, measured at one-second intervals:

| Server | chronyd | chronyd ext | ntpd PLL_SHIFT=2 | ntpd PLL_SHIFT=4 |
|---|---|---|---|---|
| S1 | 0 | 0 | 0 | 0 |
| S2 | 7 | 8 | 36 | 87 |
| S3 | 11 | 11 | 53 | 189 |
| S4 | 17 | 13 | 88 | 299 |
| S5 | 23 | 14 | 189 | 450 |
| S6 | 31 | 17 | 371 | 653 |
| S7 | 38 | 19 | 719 | 866 |
| S8 | 46 | 22 | 1158 | 1062 |
| S9 | 57 | 22 | 1527 | 1246 |
| S10 | 68 | 23 | 1815 | 1416 |
| S11 | 85 | 25 | 2039 | 1584 |
| S12 | 97 | 25 | 2447 | 1748 |
| S13 | 115 | 26 | 3067 | 1915 |
| S14 | 134 | 28 | 3731 | 2077 |
| S15 | 156 | 31 | 4419 | 2242 |
| S16 | 180 | 32 | 5331 | 2410 |

In these tests the frequency transfer implemented in chronyd reduced the time error at S16 by a factor of more than 5 (from 180 to 32 microseconds). Compared to ntpd, the error at S16 is roughly 75 to 170 times smaller, depending on the PLL shift. For ntpd, the PLL shift of 2 has the advantage that the error starts at a smaller value than with the shift of 4, but it grows more quickly and the two become comparable at S7-S8.

The following table shows the RMS frequency error in ppm from the same set of tests:

| Server | chronyd | chronyd ext | ntpd PLL_SHIFT=2 | ntpd PLL_SHIFT=4 |
|---|---|---|---|---|
| S1 | 0.0 | 0.0 | 0.0 | 0.0 |
| S2 | 0.2 | 0.2 | 0.3 | 0.1 |
| S3 | 0.2 | 0.3 | 0.4 | 0.2 |
| S4 | 0.3 | 0.5 | 0.6 | 0.5 |
| S5 | 0.5 | 0.7 | 1.2 | 0.9 |
| S6 | 0.9 | 0.9 | 2.2 | 1.0 |
| S7 | 1.0 | 1.2 | 3.5 | 1.1 |
| S8 | 1.6 | 1.3 | 4.8 | 1.1 |
| S9 | 1.7 | 1.4 | 6.8 | 1.2 |
| S10 | 2.5 | 1.5 | 8.6 | 1.2 |
| S11 | 3.5 | 1.5 | 9.4 | 1.2 |
| S12 | 4.7 | 1.6 | 11.2 | 1.3 |
| S13 | 6.2 | 1.6 | 13.8 | 1.4 |
| S14 | 7.1 | 1.7 | 16.6 | 1.4 |
| S15 | 9.0 | 1.8 | 19.4 | 1.5 |
| S16 | 11.4 | 1.8 | 24.1 | 1.6 |

The frequency transfer improved the frequency accuracy of chronyd at S16 by a factor of 6 (from 11.4 to 1.8 ppm). It’s now comparable to ntpd with the PLL shift of 4, but the time error (shown in the previous table) is very different.
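The improvement factors quoted for S16 can be checked against the two tables with a short sketch. The values are copied from the S16 rows; the helper name `ratio_to` is hypothetical.

```python
# RMS errors at S16, copied from the two tables above.
s16_time_us = {
    "chronyd": 180,
    "chronyd ext": 32,
    "ntpd PLL_SHIFT=2": 5331,
    "ntpd PLL_SHIFT=4": 2410,
}
s16_freq_ppm = {
    "chronyd": 11.4,
    "chronyd ext": 1.8,
    "ntpd PLL_SHIFT=2": 24.1,
    "ntpd PLL_SHIFT=4": 1.6,
}


def ratio_to(errors: dict, baseline: str = "chronyd ext") -> dict:
    """Error of every other configuration divided by the baseline's error."""
    return {
        name: value / errors[baseline]
        for name, value in errors.items()
        if name != baseline
    }


for label, errors in (("time", s16_time_us), ("frequency", s16_freq_ppm)):
    for name, factor in ratio_to(errors).items():
        print(f"{label} error, {name}: {factor:.1f}x the error of chronyd ext")
```

A ratio below 1 means the other configuration has the smaller error, which is the case only for the frequency error of ntpd with the PLL shift of 4.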

Summary

Separated frequency transfer in a network synchronization protocol can significantly improve stability of time transfer. Adding support to NTPv5, or possibly as a standard extension to NTPv4, would be a significant improvement of the protocol for some implementations.